Gaze in Social Interaction

The eyes are a rich channel for nonverbal communication in our daily interactions. Their appearance has evolved to be especially visible so that they provide nonverbal cues to others. Unlike the eyes of any other species, human eyes have a uniquely large, bright and clearly visible sclera (the white of the eye). This increased conspicuousness lets an observer easily discern a person’s gaze direction, enabling natural behaviours such as gaze following and joint attention. Our eye gaze therefore has a dual function in social settings: to signal and to perceive.

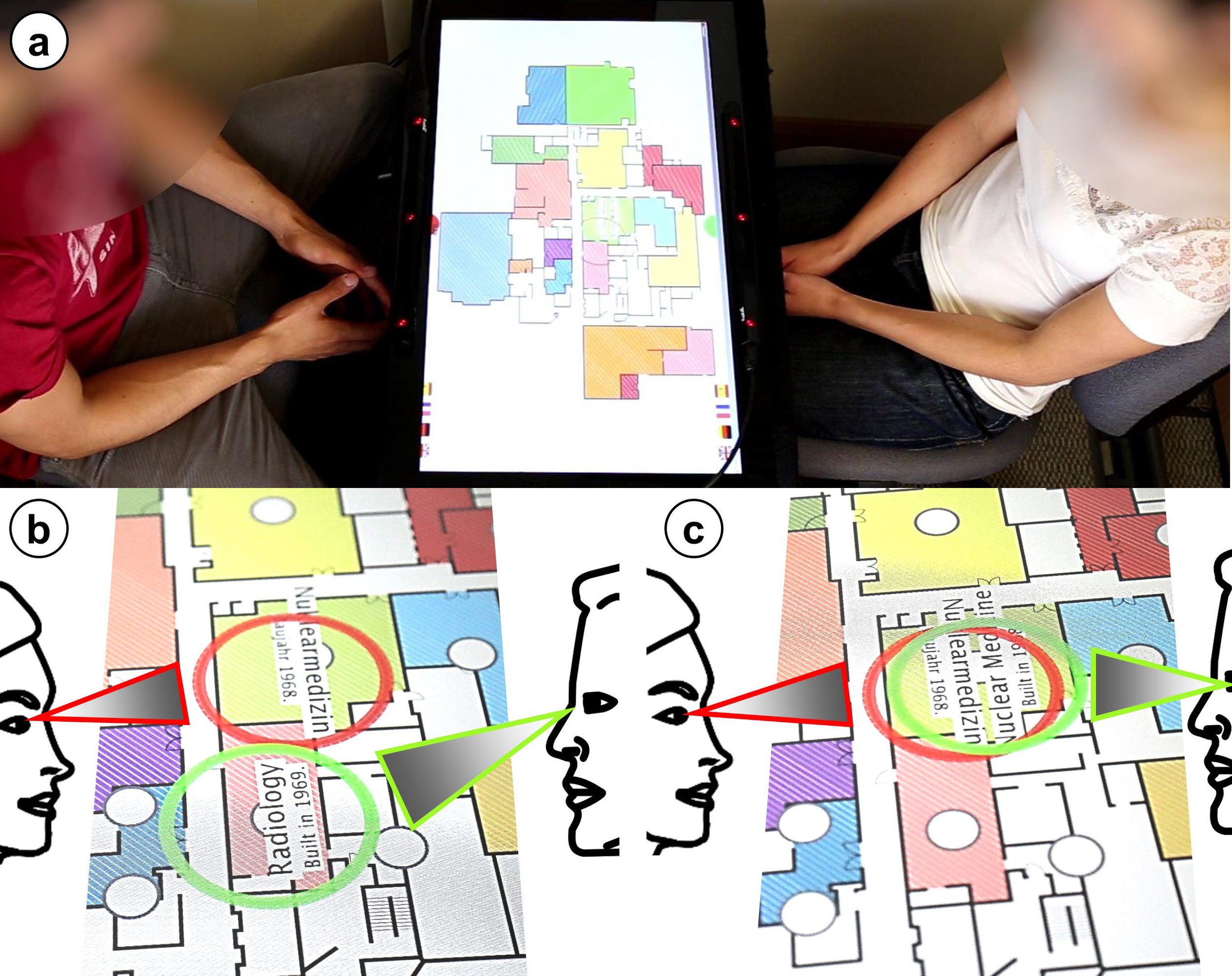

Gaze has been explored as a way to improve nonverbal communication in collaborative multi-user interfaces. Gaze visualisation is by far the most common approach for creating ‘gaze awareness’ (knowing what someone is looking at). Overlaying live gaze visualisations onto the interface or the physical world gives an observer a complementary layer of nonverbal communication. Gaze awareness is especially beneficial in remote settings, where the other person’s eyes are typically not visible.

In prior work, we have investigated gaze awareness in social interaction, from improving coordination and collaboration using gaze visualisation [1] to augmenting user experiences using implicit social gaze in VR [2]. While gaze sharing and gaze awareness have clear benefits, it is essential to acknowledge that our gaze behaviour changes in the presence of others. For example, in settings where the eyes are visible, they give away our unspoken intentions to others. In competitive settings, we can therefore manipulate our gaze in deceptive ways to hide our intentions [3]. In collaborative settings, by contrast, we can leverage known collaborative gaze behaviours (for example, joint attention) and begin to design multimodal interfaces to support them [4].

In GEMINI, we will investigate how gaze input can support multi-user interaction that augments natural human behaviour and is scalable.

[1] Yanxia Zhang, Ken Pfeuffer, Ming Ki Chong, Jason Alexander, Andreas Bulling and Hans Gellersen. Look Together: Using Gaze for Assisting Co-located Collaborative Search. Personal and Ubiquitous Computing, 21, 1.

[2] Melodie Vidal, Remi Bismuth, Andreas Bulling and Hans Gellersen. The Royal Corgi: Exploring Social Gaze Interaction for Immersive Gameplay. CHI '15: ACM Conference on Human Factors in Computing Systems.

[3] Joshua Newn, Fraser Allison, Eduardo Velloso and Frank Vetere. Looks Can Be Deceiving: Using Gaze Visualisation to Predict and Mislead Opponents in Strategic Gameplay. CHI '18: ACM Conference on Human Factors in Computing Systems.

[4] Ken Pfeuffer, Jason Alexander and Hans Gellersen. Multi-user Gaze-based Interaction Techniques on Collaborative Touchscreens. ETRA '21: ACM Symposium on Eye Tracking Research and Applications.